Your Network's Edge®

New Trends in AI and Machine Learning for Anomaly Detection

Anomaly detection is a crucial task in many fields, including cybersecurity, networking, finance, healthcare, and more. It identifies data that differ from previous observations, for example, deviations from a normal, expected, or likely probability distribution, or from the expected shape and amplitude of a signal in a time series.

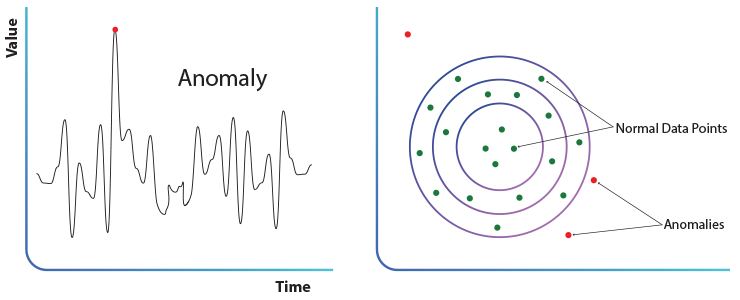

Below we can see examples of anomaly in data probability distribution and of time series anomaly:

Network Anomalies

In the networking world, an anomaly is a sudden, short-lived deviation from normal network operation. Some anomalies are caused deliberately by intruders with malicious intent, for example a denial-of-service attack on an IP network, while others are purely accidental, such as a change in the packet transfer rate (throughput) for various unintended reasons. In both cases, quick detection is needed to initiate a timely response.

Because network monitoring devices collect data at high speeds, an effective anomaly detection system must extract the relevant information from a large volume of high-dimensional, noisy data. Different anomalies are expressed in the network statistics in different ways, so it is difficult to develop general models or rules that describe both the normal behavior of the network and its anomalies. Furthermore, model-based algorithms are not transferable between applications, and even small changes in network traffic or in the physical phenomena being monitored can make a model inappropriate. Instead, non-parametric learning algorithms based on machine learning (ML) principles can learn the nature of normal measurements and adapt themselves to variations in the structure of "normality". In other words, rigid models are not fit for the task of detecting network anomalies, whereas ML algorithms that learn user behavior are better suited to tackle this problem.
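To make the idea of a detector that learns "normality" and adapts to gradual change more concrete, here is a minimal sketch, assuming a single throughput measurement per time step. It tracks an exponentially weighted moving average (EWMA) and variance and flags samples that jump too far from that running baseline. This is a classic statistical baseline rather than one of the ML methods discussed later, and the function name `ewma_anomalies` and its parameters `alpha`, `k`, and `warmup` are illustrative assumptions.

```python
import math

def ewma_anomalies(samples, alpha=0.1, k=3.0, warmup=20):
    """Return indices of samples that deviate sharply from an adaptive baseline.

    The baseline (exponentially weighted mean and variance) is updated on every
    normal sample, so slow drifts in throughput are absorbed as the new "normal",
    while sudden jumps larger than k standard deviations are flagged.
    """
    mean = samples[0]
    var = 0.0
    flagged = []
    for i, x in enumerate(samples[1:], start=1):
        diff = x - mean
        if i >= warmup and abs(diff) > k * math.sqrt(var):
            # Flag the sample and skip the update so it does not pollute the baseline.
            flagged.append(i)
            continue
        # Incremental exponentially weighted mean and variance update.
        incr = alpha * diff
        mean += incr
        var = (1 - alpha) * (var + diff * incr)
    return flagged

# Hypothetical throughput that drifts slowly upward, with small jitter and one spike at t = 60.
throughput = [100 + 0.5 * t + (1 if t % 2 == 0 else -1) for t in range(100)]
throughput[60] += 50
print(ewma_anomalies(throughput))  # → [60]
```

The slow upward drift is never flagged because the baseline keeps following it; only the abrupt spike exceeds the adaptive threshold.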

Although anomalies occur in many fields, the methods used to detect them are very similar across domains.

One of the major challenges in anomaly detection is the difficulty of clearly distinguishing between normal and abnormal behavior, because in some applications the boundary between the two is not precise and evolves over time. Furthermore, anomalies are often rare events, so labeled examples of anomalous cases are scarce compared with normal cases. As a result, semi-supervised or unsupervised learning is used more frequently than supervised learning, as explained below.

Semi-supervised and Unsupervised Learning

In a semi-supervised approach to anomaly detection, it is assumed that the training dataset contains only normal-class labels. The model is trained to learn the normal behavior of a system and then used in the testing phase to detect anomalies.
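The semi-supervised workflow (learn from normal data only, then test) can be sketched in a few lines. This is a minimal illustration, assuming a single numeric feature whose normal values are roughly Gaussian; the function names and the `k`-sigma threshold are hypothetical choices, not a specific library's API.

```python
import statistics

def fit_normal_profile(normal_train):
    """Training phase: learn the mean and standard deviation of normal-only data."""
    return statistics.fmean(normal_train), statistics.stdev(normal_train)

def detect_novelties(test_values, profile, k=3.0):
    """Testing phase: flag values more than k standard deviations from the normal mean."""
    mu, sigma = profile
    return [x for x in test_values if abs(x - mu) > k * sigma]

profile = fit_normal_profile([48, 50, 52, 49, 51, 50, 47, 53])  # mean 50, stdev 2
print(detect_novelties([50, 49, 75, 51], profile))  # → [75]
```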

On the other hand, with unsupervised learning techniques, the basic assumption is that outliers (data points that differ significantly from other observations) represent a very small fraction of the total data. Although anomaly detection is often based on unsupervised learning, it can be of significant help in building supervised predictive models.
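The unsupervised assumption that outliers are only a small fraction of the data is exactly what makes robust statistics work without labels. The sketch below, a minimal example assuming one numeric feature, uses the median and the median absolute deviation (MAD), which stay stable when only a few points are extreme, together with the commonly used modified z-score rule (0.6745 times the deviation divided by the MAD, flagged above 3.5). The function name is hypothetical.

```python
import statistics

def mad_outliers(values, threshold=3.5):
    """Return indices of outliers in unlabeled data using the modified z-score."""
    med = statistics.median(values)
    mad = statistics.median(abs(x - med) for x in values)
    if mad == 0:
        # Degenerate case: most values are identical, so any deviation stands out.
        return [i for i, x in enumerate(values) if x != med]
    return [i for i, x in enumerate(values)
            if 0.6745 * abs(x - med) / mad > threshold]

print(mad_outliers([10, 11, 9, 10, 12, 10, 11, 50]))  # → [7]
```

If outliers made up a large share of the data, the median and MAD would themselves shift, which is why this approach depends on the small-fraction assumption.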

There are three main types of anomalies:

Point anomalies. A single data point that differs significantly from the rest of the data points in the dataset.

Contextual anomalies. A data point is anomalous only in a specific context, such as its location, its time, or other contextual attributes. For example, it is reasonable to assume that household water consumption is much higher at night during a weekend than at midday on a workday, so a consumption level that is normal on a weekend night may be anomalous at midday on a workday.

Collective anomalies. A group of data points is anomalous as a whole, even though the individual data points in the group may appear normal. Collective anomalies can only be detected in datasets where the data points are related in some way, e.g., sequential, spatial, or graph data.
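Contextual anomalies can be illustrated by keeping a separate baseline per context, so that the same value can be judged differently depending on when it occurs. This is a minimal sketch, assuming readings arrive as (context, value) pairs; the context labels and consumption figures are made-up illustrative values, and the function names are hypothetical.

```python
import statistics
from collections import defaultdict

def contextual_baselines(history):
    """Build a per-context (mean, stdev) baseline from (context, value) pairs."""
    by_context = defaultdict(list)
    for context, value in history:
        by_context[context].append(value)
    return {c: (statistics.fmean(v), statistics.stdev(v)) for c, v in by_context.items()}

def is_contextual_anomaly(context, value, baselines, k=3.0):
    """A value is anomalous if it is far from the baseline of its own context."""
    mu, sigma = baselines[context]
    return abs(value - mu) > k * sigma

# Hypothetical hourly water consumption (liters) in two contexts.
history = [("weekend_night", 95), ("weekend_night", 105), ("weekend_night", 100), ("weekend_night", 100),
           ("workday_midday", 20), ("workday_midday", 25), ("workday_midday", 15), ("workday_midday", 20)]
baselines = contextual_baselines(history)
print(is_contextual_anomaly("weekend_night", 100, baselines))   # → False
print(is_contextual_anomaly("workday_midday", 100, baselines))  # → True
```

A single global threshold would treat both readings of 100 liters the same way; conditioning on the context is what exposes the second one.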

There are several machine learning approaches to finding anomalies in data. Isolation Forest, Local Outlier Factor (LOF), and clustering algorithms are all commonly used for anomaly detection.

One commonality among these methods is that they are unsupervised learning algorithms, meaning that they do not require labelled data to identify anomalies. Instead, they analyze the inherent structure of the data to identify patterns and deviations that may indicate anomalies.

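Another commonality is that they all quantify how different a data point is from the rest of the data. LOF compares the local density around a point, computed from the distances to its nearest neighbors, with the density around those neighbors, while clustering algorithms use distance metrics to group similar data points together, so points far from every cluster stand out. Isolation Forest does not rely on a distance metric: it isolates points with random splits and treats points that need fewer splits to be isolated (a shorter average path length) as more anomalous.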
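The isolation idea can be sketched from scratch to show that no distance metric is involved: random axis-aligned splits are applied until a point sits alone, and points that are isolated after only a few splits receive a high score. This is a simplified illustration, not scikit-learn's implementation; the function names are hypothetical, and it uses the usual normalized score 2^(-E[h(x)]/c(ψ)), where h(x) is the path length and ψ the subsample size. For real workloads, scikit-learn provides `sklearn.ensemble.IsolationForest` and `sklearn.neighbors.LocalOutlierFactor`.

```python
import math
import random

EULER_GAMMA = 0.5772156649

def average_path_length(n):
    """c(n): expected path length of an unsuccessful search in a binary search
    tree with n nodes, used to normalize isolation depths."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    harmonic = math.log(n - 1) + EULER_GAMMA  # approximates the harmonic number H(n - 1)
    return 2.0 * harmonic - 2.0 * (n - 1) / n

def build_tree(points, depth, max_depth, rng):
    """Isolate points with random axis-aligned splits; no distances are computed."""
    if depth >= max_depth or len(points) <= 1:
        return ("leaf", len(points))
    dim = rng.randrange(len(points[0]))   # pick a random feature
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:                          # simplification: stop on a constant feature
        return ("leaf", len(points))
    split = rng.uniform(lo, hi)           # pick a random split value
    left = [p for p in points if p[dim] < split]
    right = [p for p in points if p[dim] >= split]
    return ("node", dim, split,
            build_tree(left, depth + 1, max_depth, rng),
            build_tree(right, depth + 1, max_depth, rng))

def path_length(point, node, depth=0):
    """Splits needed to reach a leaf, plus c(size) for leaves holding several points."""
    if node[0] == "leaf":
        return depth + average_path_length(node[1])
    _, dim, split, left, right = node
    return path_length(point, left if point[dim] < split else right, depth + 1)

def isolation_scores(points, n_trees=100, sample_size=256, seed=0):
    """Score each point in (0, 1); scores close to 1 suggest anomalies."""
    if len(points) < 2:
        raise ValueError("need at least two points")
    rng = random.Random(seed)
    psi = min(sample_size, len(points))
    max_depth = math.ceil(math.log2(psi))
    trees = [build_tree(rng.sample(points, psi), 0, max_depth, rng) for _ in range(n_trees)]
    c = average_path_length(psi)
    return [2.0 ** (-sum(path_length(p, t) for t in trees) / n_trees / c) for p in points]

# 25 inliers on a small grid around the origin plus one far-away point.
points = [(x * 0.5, y * 0.5) for x in range(-2, 3) for y in range(-2, 3)]
points.append((8.0, 8.0))
scores = isolation_scores(points)
print(max(range(len(points)), key=scores.__getitem__))  # → 25 (the far-away point)
```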

Overall, while each method has its own unique approach to anomaly detection, they share these commonalities and can be used in conjunction with each other to improve the accuracy of anomaly detection in a given dataset.
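One simple way to use several detectors together is to bring their scores onto a common scale and average them. The sketch below assumes each detector outputs a score where higher means more anomalous (a detector with the opposite convention would need its scores negated first); min-max normalization and plain averaging are illustrative choices, and the score values are hypothetical.

```python
def min_max_normalize(scores):
    """Rescale one detector's scores to [0, 1] so different detectors are comparable."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]

def combine_scores(*score_lists):
    """Average the normalized scores that several detectors gave the same data points."""
    normalized = [min_max_normalize(s) for s in score_lists]
    return [sum(col) / len(col) for col in zip(*normalized)]

# Hypothetical scores for four data points from two detectors.
iforest_scores = [0.45, 0.50, 0.48, 0.80]
lof_scores = [1.0, 1.1, 1.05, 3.2]
print(combine_scores(iforest_scores, lof_scores))
```

The fourth point scores highest under both detectors, so it also receives the highest combined score.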

Deep Learning Techniques for Anomaly Detection

The issue of finding anomalies is of such importance that tech giants such as Google, Amazon, and Facebook have all developed their own algorithms to address it. Amazon has developed the Robust Random Cut Forest (RRCF) algorithm, a variant of the Isolation Forest algorithm designed for streaming data. Google and Facebook have each developed tools for time series forecasting (prediction), which is another way to find anomalies in time series. This approach assumes that the future can be predicted from historical data, so a significant deviation from the prediction is recorded as an anomaly.

Prophet is a time series forecasting algorithm developed by Facebook's Core Data Science team, and NeuralProphet is a neural-network-based successor to it. One of the main advantages of Prophet is its ability to handle a variety of time series patterns, including trends, seasonality, and holiday effects. Facebook's approach, known as a "decomposable model", represents a time series as a combination of several components. Prophet applies a decomposable model with three main components (trend, seasonality, and holidays), each of which can be modeled using different techniques.

Google's AutoML Tables is an end-to-end machine learning platform that provides automatic feature engineering (selecting the right features for the ML model and preparing them to improve the model's performance) and automatic model selection. It includes time series forecasting capabilities, allowing users to upload time series data and generate forecasts with just a few clicks.
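Prophet models a series additively as y(t) = g(t) + s(t) + h(t) + ε(t), with g the trend, s the seasonality, and h the holiday effects. The sketch below is not Prophet and does not use its API: it is a hypothetical toy decomposable model (a least-squares linear trend plus a repeating seasonal offset, with no holiday term) used only to show forecast-based anomaly detection, where a point is flagged when its forecast residual exceeds `k` times the residual standard deviation seen on the history. In practice, a forecasting library such as the `prophet` package would replace `fit_trend_seasonal`.

```python
import statistics

def fit_trend_seasonal(history, period):
    """Fit a toy decomposable model: linear trend plus a repeating seasonal offset."""
    ts = range(len(history))
    t_mean = statistics.fmean(ts)
    y_mean = statistics.fmean(history)
    slope = (sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, history))
             / sum((t - t_mean) ** 2 for t in ts))
    intercept = y_mean - slope * t_mean
    detrended = [y - (intercept + slope * t) for t, y in zip(ts, history)]
    seasonal = [statistics.fmean(detrended[p::period]) for p in range(period)]
    residuals = [d - seasonal[t % period] for t, d in enumerate(detrended)]
    return intercept, slope, seasonal, statistics.pstdev(residuals)

def detect_forecast_anomalies(history, future, period, k=3.0):
    """Return time indices in `future` whose value deviates from the forecast by > k sigma."""
    intercept, slope, seasonal, sigma = fit_trend_seasonal(history, period)
    flagged = []
    for i, y in enumerate(future):
        t = len(history) + i
        forecast = intercept + slope * t + seasonal[t % period]
        if abs(y - forecast) > k * sigma:
            flagged.append(t)
    return flagged

SEASON = [2, -2, -2, 2]

def series(t):
    # Hypothetical data: trend + seasonality + small jitter that repeats every 3 steps.
    return 10 + 0.5 * t + SEASON[t % 4] + 0.3 * (t % 3 - 1)

history = [series(t) for t in range(48)]
future = [series(t) for t in range(48, 56)]
future[3] += 20  # injected spike at t = 51
print(detect_forecast_anomalies(history, future, period=4))  # → [51]
```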

Deep learning has become a popular approach for anomaly detection in time series data. One of the most advanced techniques is the use of Variational Autoencoders (VAEs).

VAEs are a type of generative model that learns to encode high-dimensional data into a lower-dimensional latent space (much as a 3D movie is shown on a 2D TV screen) and to decode it back into the original space. In the context of anomaly detection, a VAE is trained on normal time series data so that it learns to reconstruct normal patterns well; data points that the model reconstructs poorly, i.e., that deviate significantly from the learned normal distribution, are flagged as anomalies.
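The reconstruction-based reasoning can be written out explicitly. A VAE is trained by maximizing the evidence lower bound (ELBO), where q_φ(z | x) is the encoder, p_θ(x | z) the decoder, and p(z) the prior over the latent space (commonly a standard normal). A common, but not the only, anomaly score is the reconstruction error between x and its reconstruction \hat{x}, obtained by decoding a latent code z drawn from q_φ(z | x); the threshold τ is an assumption, typically chosen on held-out normal data.

```latex
\mathcal{L}(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] - D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big) \le \log p_\theta(x)

s(x) = \lVert x - \hat{x} \rVert_2^2, \qquad x \text{ is flagged as anomalous if } s(x) > \tau
```

Other variants score points by the reconstruction probability under p_θ(x | z) instead of the squared error.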

Another advanced deep learning technique for anomaly detection in time series data is the use of Generative Adversarial Networks (GANs). GANs are a type of generative model that consists of two neural networks: a generator that produces fake data, and a discriminator that tries to distinguish between real and fake data. In the context of anomaly detection, a GAN is trained on normal time series data so that the generator learns to produce realistic normal samples. At test time, real data points that the generator cannot reproduce closely, or that the discriminator judges unlikely to be real, are identified as anomalies.
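The adversarial training described above is the standard GAN minimax game between the generator G and the discriminator D. For detection, one family of methods (similar in spirit to AnoGAN) combines a residual term, measuring how closely the generator can reproduce a test sample x, with a discriminator-based term; the weight λ and the exact discriminator score S_D(x) are assumptions that vary between published methods, so the second line is a sketch rather than a fixed formula.

```latex
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\mathrm{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]

A(x) = (1 - \lambda)\, \min_{z} \lVert x - G(z) \rVert_1 + \lambda\, S_D(x), \qquad 0 \le \lambda \le 1
```

A larger A(x) means the sample is harder to explain as normal data, so points with A(x) above a chosen threshold are flagged.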

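Finally, Deep Support Vector Data Description (Deep SVDD) is another advanced deep learning technique for anomaly detection in time series data. Deep SVDD trains a neural network to map the time series data into a feature space in which the normal data fall inside a hypersphere (the generalization of a sphere to any number of dimensions) of minimum volume. It extends the classic Support Vector Data Description (SVDD) method, a kernel-based technique closely related to one-class Support Vector Machines (SVMs), by replacing the kernel feature mapping with a learned neural network. Any data points whose mapped representations fall outside the hypersphere are considered anomalous.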
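One-Class Deep SVDD trains the network weights W to minimize the mean squared distance (1/n) Σ ||φ(x_i; W) − c||² of the mapped normal data from a fixed center c (plus weight decay), and then scores a new point by its squared distance from c. The sketch below shows only the scoring step, under loud assumptions: the training step is omitted, the hypothetical `feature_map` is a hand-written stand-in for the trained network φ, and the radius is simply the largest distance seen on the normal training windows.

```python
import math
import statistics

def feature_map(window):
    """Hypothetical stand-in for a trained Deep SVDD network phi(x; W).

    A real Deep SVDD model learns this mapping; here a window is simply
    summarized by its mean and population standard deviation.
    """
    return (statistics.fmean(window), statistics.pstdev(window))

def fit_center_and_radius(normal_windows):
    """Center c = mean of the mapped normal data; radius = largest training distance."""
    feats = [feature_map(w) for w in normal_windows]
    center = tuple(statistics.fmean(f[d] for f in feats) for d in range(len(feats[0])))
    radius = max(math.dist(f, center) for f in feats)
    return center, radius

def svdd_score(window, center):
    """Anomaly score: squared distance of the mapped window from the center c."""
    return math.dist(feature_map(window), center) ** 2

def is_anomalous(window, center, radius):
    """Flag windows whose mapped representation falls outside the hypersphere."""
    return svdd_score(window, center) > radius ** 2

normal_windows = [[0.8, 1.0, 0.8, 1.0], [0.9, 1.1, 0.9, 1.1], [1.0, 1.2, 1.0, 1.2]]
center, radius = fit_center_and_radius(normal_windows)
print(is_anomalous([0.95, 1.05, 0.95, 1.05], center, radius))  # → False
print(is_anomalous([1.0, 3.0, 1.0, 3.0], center, radius))      # → True
```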

Overall, VAEs, GANs, and Deep SVDD are some of the most advanced deep learning techniques for anomaly detection in time series data, and each has its own advantages and limitations depending on the specific data and use case.

Since anomalies affect many areas, anomaly detection has gained increased importance and efforts are constantly being made to develop algorithms that will be as fast, robust and accurate as possible.